The first step in any professional campaign is to do some keyword research and analysis.

Somebody asked me about a simple white hat tactic, and I think this is probably the simplest thing anyone can do that guarantees results.

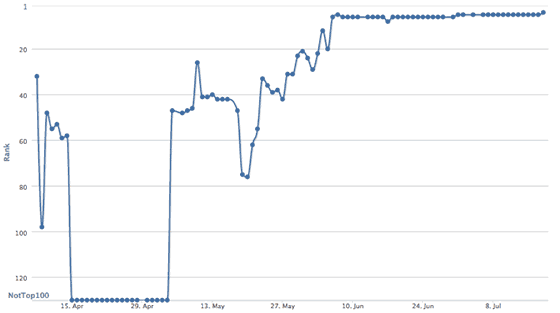

The chart above (from last year) illustrates a reasonably valuable 4 word term that a page of mine didn’t rank high in Google for, but which I thought it probably should, and could, rank for with this simple technique.

I thought it was a simple example to illustrate an aspect of onpage seo, or ‘rank modification’, that’s white hat, 100% Google friendly and never, ever going to cause you a problem with Google. This ‘trick’ works with any keyword phrase, on any site, with obviously differing results based on the availability of competing pages in SERPS, and the availability of content on your site.

The keyword phrase I am testing rankings for isn’t ON the page, and I did NOT add the key phrase to the page or to incoming links, or use any technical tricks like redirects or any hidden technique, but as you can see from the chart, rankings seem to be going in the right direction.

You can profit from it if you know a little about how Google works (or seems to work, in many observations, over years, excluding when Google throws you a bone on synonyms). You can’t ever be 100% certain you know how Google works on any level, unless it’s data showing you’re wrong, of course.

What did I do to rank number 1 from nowhere for that key phrase?

I added one keyword to the page in plain text, because adding the actual ‘keyword phrase’ itself would have made my text read a bit keyword stuffed for other variations of the main term. It gets interesting if you do that to a lot of pages, and a lot of keyword phrases. The important thing is keyword research – and knowing which unique keywords to add.
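As a rough illustration of that check, here is a minimal Python sketch that lists which words of a target phrase are absent from a page’s plain text. The function name `missing_phrase_words`, the sample text and the sample phrase are all made up for this example. It only does a naive exact word match (no stemming, no synonyms), so treat it as a starting point for your own research and not as a model of how Google evaluates relevance.

```python
import re

def missing_phrase_words(page_text, phrase):
    """Return the words of `phrase` that do not appear in `page_text`.

    Hypothetical helper: naive, case-insensitive exact word match only.
    """
    page_words = set(re.findall(r"[a-z0-9']+", page_text.lower()))
    phrase_words = re.findall(r"[a-z0-9']+", phrase.lower())
    missing = []
    for word in phrase_words:
        # Keep phrase order and skip repeats.
        if word not in page_words and word not in missing:
            missing.append(word)
    return missing

# Made-up example text and phrase, purely for illustration.
page = "Our guide covers keyword research for small business websites."
target = "keyword research tutorial for beginners"
print(missing_phrase_words(page, target))
```

Whether adding any of the missing words reads naturally on the page is still a judgement call you have to make yourself.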

This illustrates that a key to ‘relevance’ is…. a keyword. The right keyword.

Yes – plenty of other things can be happening at the same time. It’s hard to identify EXACTLY why Google ranks pages all the time…but you can COUNT on other things happening and just get on with what you can see works for you.

In a time of light optimisation, it’s useful to earn a few terms you SHOULD rank for in simple ways that leave others wondering how you got it.

Of course you can still keyword stuff a page, or still spam your link profile – but it is ‘light’ optimisation I am genuinely interested in testing on this site – how to get more with less – I think that’s the key to not tripping Google’s aggressive algorithms.

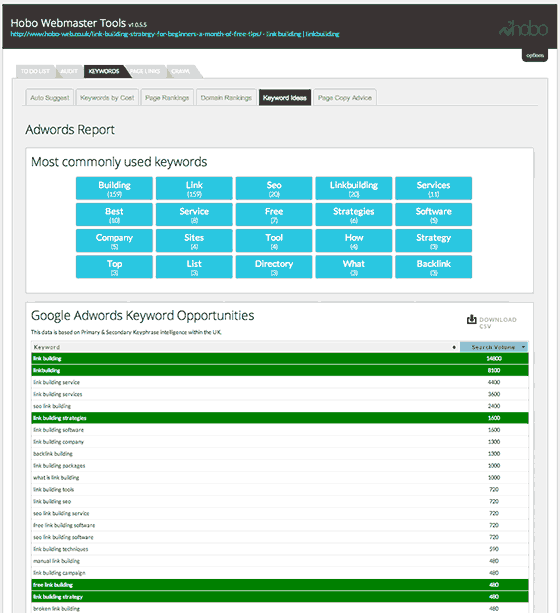

There are many tools on the web to help with basic keyword research, including the Google Keyword Planner tool, and there are even more useful third party seo tools to help you do this.

You can use many keyword research tools to quickly identify opportunities to get more traffic to a page:

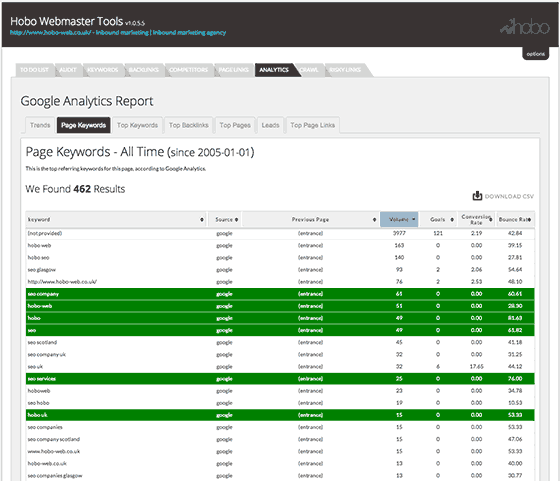

Google Analytics Keyword ‘Not Provided’

Google Analytics was the very best place to look at keyword opportunity for some (especially older) sites, but that all changed a few years back.

Google stopped telling us which keywords are sending traffic to our sites from the search engine back in October 2011, as part of privacy concerns for its users.

Google will now begin encrypting searches that people do by default, if they are logged into Google.com already through a secure connection. The change to SSL search also means that sites people visit after clicking on results at Google will no longer receive “referrer” data that reveals what those people searched for, except in the case of ads.

Google Analytics now displays keyword “not provided” instead.

In Google’s new system, referrer data will be blocked. This means site owners will begin to lose valuable data that they depend on, to understand how their sites are found through Google. They’ll still be able to tell that someone came from a Google search. They won’t, however, know what that search was. (SearchEngineLand)

You can still get some of this data if you sign up for Google Webmaster Tools (and you can combine this in Google Analytics), but the data even there is limited and often not entirely accurate. The keyword data can be useful though – and access to backlink data is essential these days.
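If you export that query data to a spreadsheet, one way to look for opportunities is to filter for queries that already get impressions but sit just off the first page. The sketch below is only an illustration under stated assumptions: the column names (`query`, `impressions`, `position`), the sample rows and the thresholds are all invented, a real export may be laid out differently, and treating positions 11 to 20 as ‘page two’ assumes ten results per page.

```python
import csv
import io

# Made-up sample rows; a real export may use different column names.
SAMPLE_EXPORT = """query,impressions,position
blue widgets,1200,14.2
buy blue widgets online,90,3.1
widget repair guide,800,11.0
cheap widgets,40,25.7
"""

def find_opportunities(csv_text, min_impressions=100, min_pos=11.0, max_pos=20.0):
    """Return queries with decent impressions that rank just off page one."""
    rows = csv.DictReader(io.StringIO(csv_text))
    hits = []
    for row in rows:
        impressions = int(row["impressions"])
        position = float(row["position"])
        if impressions >= min_impressions and min_pos <= position <= max_pos:
            hits.append((row["query"], impressions, position))
    # Highest impressions first.
    return sorted(hits, key=lambda hit: hit[1], reverse=True)

for query, impressions, position in find_opportunities(SAMPLE_EXPORT):
    print(query, impressions, position)
```

Given how limited the data is, a list like this is a prompt for where to look, not a ranking forecast.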

If the website you are working on is an aged site – there’s probably a wealth of keyword data in Google Analytics.

This is another example of Google making ranking in organic listings HARDER – a change for ‘users’ that seems to have the most impact on ‘marketers’ outside of Google’s ecosystem – yes – search engine optimisers.

Now, consultants need to be page centric (abstract, I know), instead of just keyword centric when optimising a web page for Google. There are now plenty of third party tools that help when researching keywords but most of us miss the kind of keyword intelligence we used to have access to.

Proper keyword research is important because getting a site to the top of Google eventually comes down to your text content on a page and keywords in external & internal links. Altogether, Google uses these signals to determine where you rank, if you rank at all.

There’s no magic bullet to this.

At any one time, your site is probably feeling the influence of some sort of algorithmic filter (for example, Google Panda or Google Penguin) designed to keep spam sites under control and deliver relevant high quality results to human visitors.

One filter may be kicking in keeping a page down in the SERPS, while another filter is pushing another page up. You might have poor content but excellent incoming links, or vice versa. You might have very good content, but a very poor technical organisation of it.

Try and identify the reasons Google doesn’t ‘rate’ a particular page higher than the competition – the answer is usually on the page or in backlinks pointing to the page.

- Do you have too few inbound quality links?

- Do you have too many?

- Does your page lack descriptive keyword rich text?

- Are you keyword stuffing your text?

- Do you link out to irrelevant sites?

- Do you have too many advertisements above the fold?

- Do you have affiliate links on every page of your site, and text found on other websites?

Whatever they are, identify issues and fix them.
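For the keyword stuffing question in the list above, a crude first pass is to see what share of a page’s words is taken up by its most repeated terms. The sketch below is a minimal example with an invented function name (`word_shares`) and invented sample text. It does not filter out common words like ‘the’, and there is no threshold here that Google is known to use, so the numbers are only a prompt to re-read the copy yourself.

```python
import re
from collections import Counter

def word_shares(text, top_n=3):
    """Return the top_n most frequent words with their share of all words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return []
    counts = Counter(words)
    total = len(words)
    return [(word, count / total) for word, count in counts.most_common(top_n)]

# Made-up sample copy, deliberately repetitive.
sample = "cheap widgets cheap widgets buy cheap widgets today cheap"
for word, share in word_shares(sample):
    print(f"{word}: {share:.0%}")
```

If one or two terms dominate a page that is supposed to read naturally, that is usually worth a second look.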

Get on the wrong side of Google and your site might well be flagged for MANUAL review – so optimise your site as if, one day, you will get that website review from a Google Web Spam reviewer.

The key to a successful campaign, I think, is persuading Google that your page is most relevant to any given search query. You do this by good unique keyword rich text content and getting “quality” links to that page. The latter is far easier to say these days than actually do!

Next time you’re developing a page, consider that what looks spammy to you is probably spammy to Google. Ask yourself which pages on your site are really necessary. Which links are necessary? Which pages on the site are emphasised in the site architecture? Which pages would you ignore?

You can help a site along in any number of ways (including making sure your page titles and meta tags are unique) but be careful. Obvious evidence of ‘rank modifying’ is dangerous.
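Checking that page titles are unique is easy to automate once you have a list of URLs and their titles. This is a minimal sketch, assuming you have already collected the titles into a dictionary by some other means; the function name `duplicate_titles` and the sample pages are hypothetical. It treats titles that differ only in case or surrounding whitespace as duplicates, which is my assumption and not a documented Google rule.

```python
from collections import defaultdict

def duplicate_titles(pages):
    """Map each title used more than once to the URLs that share it."""
    by_title = defaultdict(list)
    for url, title in pages.items():
        # Normalise case and whitespace before comparing.
        by_title[title.strip().lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}

# Made-up pages, purely for illustration.
pages = {
    "/": "Acme Widgets",
    "/about": "About Acme Widgets",
    "/contact": "Acme Widgets",
}
print(duplicate_titles(pages))
```

The same approach works for meta descriptions if you pass in those instead of titles.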

I prefer simple seo techniques, and ones that can be measured in some way. I have never just wanted to rank for competitive terms; I have always wanted to understand at least some of the reasons why a page ranked for these key phrases. I try to create a good user experience for humans AND search engines. If you make high quality text content relevant and suitable for both these audiences, you’ll more than likely find success in organic listings and you might not ever need to get into the technical side of things, like redirects and search engine friendly urls.

To beat the competition in an industry where it’s difficult to attract quality links, you have to get more “technical” sometimes – and in some industries – you’ve traditionally needed to be 100% black hat to even get in the top 100 results of competitive, transactional searches.

There are no hard and fast rules to long term ranking success, other than developing quality websites with quality content and quality links pointing to them. The less domain authority you have, the more text you’re going to need. The aim is to build a satisfying website and build real authority!

You need to mix it up and learn from experience. Make mistakes and learn from them by observation. I’ve found getting penalised is a very good way to learn what not to do.

Remember there are exceptions to nearly every rule, and in an ever fluctuating landscape you probably have little chance of determining exactly why you rank in search engines these days. I’ve been doing it for over 10 years and every day I’m trying to better understand Google, to learn more and learn from others’ experiences.

It’s important not to obsess about granular ranking specifics that have little return on your investment, unless you really have the time to do so! THERE IS USUALLY SOMETHING MORE VALUABLE TO SPEND THAT TIME ON. That’s usually either good backlinks or great content.
